Key Points

- Data-driven decision-making has become an essential aspect of modern business for many industries and organizations of all sizes

- ETL pipelines are essential for properly handling data integration from various sources, allowing businesses to analyze and extract value from data

- ETL testing is vital to ensure the integrity and accuracy of any data that passes through an ETL pipeline

- AI will undoubtedly play a huge role in future ETL testing, helping to automate tasks, identify patterns, and predict issues before they occur

At a time when we’re generating more than 300 million terabytes of data every day, organizations of all sizes rely heavily on data to extract hidden insights, create business strategies, and gain a competitive edge.

With an increasing volume of data from sources like web apps, social media, IoT, and internal systems, collecting, integrating, and analyzing these disparate data streams can be challenging. Moreover, these often require formatting, cleansing, and modification before they can be effectively stored and used for analysis.

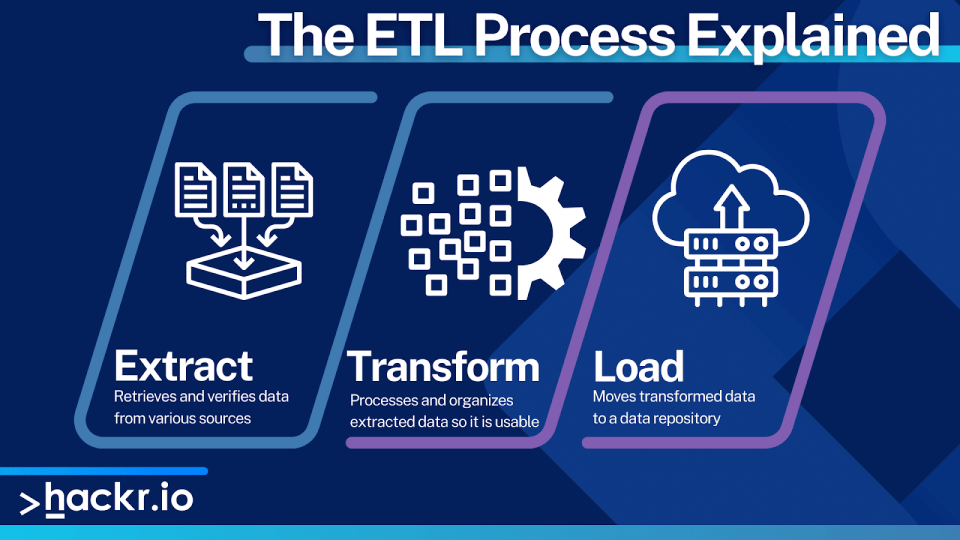

That’s where an ETL (Extract, Transform, Load) pipeline comes in, as it can be used to extract data from multiple sources, transform it based on requirements, and load the result to a target data repository for analysis, reporting, and decision-making. If you’re interested in learning data engineering, expect to learn about ETL.

But how can data engineers ensure that an ETL pipeline handles data in an accurate, consistent, and reliable way? That’s where ETL testing comes in.

ETL testing is a type of software testing that’s used to verify data is correctly extracted from source systems, transformed according to requirements, and loaded into the target data repository without loss, corruption, or inconsistencies.

The importance of ETL testing really cannot be overstated. Without quality data, it’s impossible to conduct meaningful analysis or reporting, two areas that are essential for organizations to make informed decisions, identify opportunities, and address challenges.

Let’s take a deeper dive into the world of ETL testing, including the ETL process and the future impact of AI on ETL testing.

An Overview of ETL

As organizations become increasingly dependent on data to drive their strategies and operations, the need for robust, scalable, and efficient ETL processes becomes more pressing.

In particular, the complexity of managing and integrating vast amounts of structured and unstructured data from diverse sources has made well-designed ETL pipelines a necessity.

As a complement to these pipelines, rigorous testing procedures are also essential to ensure data quality is maintained throughout the entire data integration process.

In general, ETL involves gathering data from various sources and converting it to a standard format. The goal is to make data available for deep analysis and reporting, which in turn allows businesses to find insights and make well-informed decisions.

In terms of the actual ETL process, transformation occurs within a dedicated engine, frequently using temporary tables to hold data before loading it to the final destination. Data transformation can also involve multiple data operations, like filtering, organizing, consolidating, merging, cleansing, removing duplicates, and verification.
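As a rough illustration of the staging idea described above, here is a minimal sketch using Python's built-in `sqlite3` module. The table names (`staging_customers`, `customers`) and the cleansing rules are assumptions made up for this example, not part of any particular ETL product.

```python
import sqlite3

def load_via_staging(rows):
    """Stage raw rows in a temp table, then cleanse and deduplicate into the final table."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical staging (temporary) and destination tables
    conn.execute("CREATE TEMP TABLE staging_customers (id INTEGER, email TEXT)")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
    conn.executemany("INSERT INTO staging_customers VALUES (?, ?)", rows)
    # Cleanse (trim, lowercase), filter out missing emails, and keep one row per id
    conn.execute(
        """
        INSERT INTO customers (id, email)
        SELECT id, MIN(LOWER(TRIM(email)))
        FROM staging_customers
        WHERE email IS NOT NULL AND TRIM(email) <> ''
        GROUP BY id
        """
    )
    conn.commit()
    return conn.execute("SELECT id, email FROM customers ORDER BY id").fetchall()

raw = [(1, " Ana@Example.com "), (1, "ana@example.com"), (2, None), (3, "bo@example.com")]
final_rows = load_via_staging(raw)
print(final_rows)
```

Here the duplicate for customer 1 collapses into a single row and the record with no email is filtered out before anything reaches the final table.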

Let’s take a closer look at the general ETL process:

- Extract: The first step is extracting raw data from various sources, such as databases, flat files, APIs, or web services. This requires careful planning to ensure the data is retrieved without any loss or corruption.

- Transform: Raw data needs to be transformed into a consistent format that can be easily understood and analyzed. This requires cleaning, validating, and applying business rules to data. Data transformation often entails considerable manipulation via filtering, sorting, merging, and splitting.

- Load: Finally, transformed data is loaded into a data repository like a data warehouse, where it is organized and made available for analysis. This stage often requires optimizing data structures and storage methods to ensure efficient data retrieval and reporting.

These three ETL stages are often executed concurrently, meaning that while data is being extracted, a transformation process could operate on acquired data to prepare it for loading. And rather than waiting for extraction to finish, a loading process can start on the prepared data.
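To make the three stages and their overlap more concrete, here is a small sketch that chains them as Python generators, so each record is transformed and handed to the loader as soon as it is extracted. The CSV content, the `orders` table, and the "skip rows with no amount" rule are all assumptions for illustration. Note that generators interleave the stages rather than running them in parallel; a production pipeline would typically use queues, threads, or a dedicated engine.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this could be a file, API, or database
SOURCE_CSV = "order_id,amount,currency\n1,10.50,usd\n2,,usd\n3,7.25,eur\n"

def extract(text):
    """Yield raw rows one at a time from a CSV source."""
    yield from csv.DictReader(io.StringIO(text))

def transform(rows):
    """Validate and normalize each row as soon as it has been extracted."""
    for row in rows:
        if not row["amount"]:
            continue  # assumed business rule: skip rows with no amount
        yield int(row["order_id"]), float(row["amount"]), row["currency"].upper()

def load(records, conn):
    """Insert transformed records into the target table and return the row count."""
    conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

conn = sqlite3.connect(":memory:")
loaded = load(transform(extract(SOURCE_CSV)), conn)
print(loaded)
```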

What is ETL Testing?

ETL testing is a systematic process for identifying and resolving data quality issues that can occur during the ETL process, such as missing or duplicate data, incorrect data types or formats, and data inconsistencies or discrepancies. By uncovering and resolving these issues before data reaches the data warehouse, ETL testing helps ensure data is trustworthy and reliable.

The primary goal is to ensure data integration meets the defined business requirements while giving businesses the confidence to use data for critical decision-making, strategic planning, and performance evaluation.

But how does ETL testing work?

The process typically begins with an understanding of business requirements, data sources, and target data warehouse schema. Testers then create a comprehensive plan that outlines the various scenarios, test cases, and expected results.

This testing plan can also include various types of ETL tests to ensure a thorough coverage of the data integration process.

Once a test plan is in place, testers write SQL queries or use specialized ETL testing tools to carry out tests. The objective here is to identify discrepancies between source and target data, validate data transformations, and ensure data integrity and referential integrity are maintained throughout the ETL process.
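As a simple example of the kind of SQL a tester might write, the sketch below compares a source and a target table in an in-memory SQLite database. The table names `src_orders` and `tgt_orders` and the sample rows are hypothetical; in a real project the source and target usually live in different systems, so the comparison often runs on extracts.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE src_orders (order_id INTEGER, amount REAL);
    CREATE TABLE tgt_orders (order_id INTEGER, amount REAL);
    INSERT INTO src_orders VALUES (1, 10.5), (2, 20.0), (3, 7.25);
    INSERT INTO tgt_orders VALUES (1, 10.5), (2, 25.0);
    """
)

def count_mismatch(conn):
    """Return source row count minus target row count (0 means the counts agree)."""
    src = conn.execute("SELECT COUNT(*) FROM src_orders").fetchone()[0]
    tgt = conn.execute("SELECT COUNT(*) FROM tgt_orders").fetchone()[0]
    return src - tgt

def rows_missing_or_changed(conn):
    """Return source rows that have no identical counterpart in the target."""
    return conn.execute(
        "SELECT order_id, amount FROM src_orders "
        "EXCEPT SELECT order_id, amount FROM tgt_orders "
        "ORDER BY order_id"
    ).fetchall()

print(count_mismatch(conn))
print(rows_missing_or_changed(conn))
```

The row count check flags that one record never arrived, while the `EXCEPT` query pinpoints both the missing order and the order whose amount changed in transit.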

Testers often write complex queries that join multiple tables and rely on aggregate functions and conditional statements. Additionally, testers may use data profiling techniques to analyze the quality and consistency of the data.
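Data profiling can be as simple as summarizing each column. The following sketch, which assumes rows arrive as plain tuples and uses made-up column names, reports null counts, distinct counts, and min/max values per column.

```python
def profile(rows, columns):
    """Return per-column null count, distinct count, and min/max of non-null values."""
    report = {}
    for index, name in enumerate(columns):
        values = [row[index] for row in rows]
        present = [v for v in values if v is not None]
        report[name] = {
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
            "min": min(present) if present else None,
            "max": max(present) if present else None,
        }
    return report

# Hypothetical sample of loaded rows: (order_id, country, amount)
rows = [(1, "US", 10.5), (2, None, 20.0), (3, "US", None), (4, "DE", 7.25)]
report = profile(rows, ["order_id", "country", "amount"])
for column, stats in report.items():
    print(column, stats)
```

A profile like this makes unexpected nulls or suspicious value ranges visible before more targeted tests are written.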

As each test is executed, testers document findings, including errors or inconsistencies discovered during testing. This is critical for communicating results to the dev team so they can make adjustments to address issues. Testers also use this documentation to update test cases and create regression tests for future ETL iterations.

Security is often a concern during ETL tests, and data masking techniques are often used to protect sensitive information. This also ensures compliance requirements are met.
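One common masking approach is to replace sensitive values with a keyed hash, so the same input always maps to the same masked value and joins between tables still work. The sketch below is one possible way to do that with Python's standard `hmac` and `hashlib` modules; the key, the `user_` prefix, and the 12-character truncation are assumptions chosen for this example.

```python
import hashlib
import hmac

SECRET_KEY = b"test-only-key"  # assumption: in practice, a key stored outside the test data set

def mask_email(email, key=SECRET_KEY):
    """Replace the local part of an email with a keyed hash, keeping the domain."""
    local, _, domain = email.partition("@")
    digest = hmac.new(key, local.lower().encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}@{domain}"

masked = mask_email("Ana.Silva@example.com")
print(masked)
```

Because the local part is lowercased before hashing, `Ana.Silva@example.com` and `ana.silva@example.com` produce the same masked address.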

What are the Different Types of ETL Testing?

Let’s take a look at some of the common types of ETL testing:

- Source-to-target data reconciliation: This involves comparing data from the source system to data in the target data warehouse, ensuring extraction and loading processes have been performed correctly.

- Data transformation testing: This validates data transformation rules have been accurately applied, and that transformed data aligns with the target data warehouse schema.

- Integration testing: This evaluates the end-to-end functionality of the ETL process, verifying that all components, including extraction, transformation, and loading, work together seamlessly.

- Performance testing: This assesses the efficiency and speed of the ETL process, ensuring it meets the required performance benchmarks and can handle large volumes of data within the stipulated time frame.

- Functional testing: This focuses on the functionality of the ETL process, verifying it meets business requirements and produces desired outcomes.

- Unit testing: This examines individual components or modules of the ETL process, ensuring each functions correctly before integrating into the system.

- ETL validation: This encompasses a series of checks performed throughout the ETL process, including source data validation, transformation validation, and target data validation, to confirm that the entire process is accurate and error-free.

- Data Completeness Testing: This ensures that all expected data is extracted from the source systems and loaded into the target system.

- Data Accuracy Testing: This focuses on validating the accuracy and correctness of the transformed data by comparing values in the target system with expected values based on predefined business rules or transformations.

- Data Integrity Testing: This checks for data integrity in the target system by validating referential integrity constraints, primary key constraints, or other integrity rules are maintained during transformation and loading.

- Data Quality Testing: This assesses the quality of data being processed in the ETL pipeline by identifying and flagging data quality issues such as missing values, duplicates, invalid data formats, or quality standard violations.

- Error Handling Testing: This examines how the ETL system handles errors and exceptions during the ETL process by verifying error messages are logged correctly, and that error recovery mechanisms are in place.

- Reconciliation Testing: This compares data in the source system with data loaded into the target system to ensure consistency by validating record counts, data values, and aggregations.

- Metadata Testing: This focuses on validating metadata from ETL processes by verifying it accurately describes data sources, transformations, and mappings.

- Regression Testing: This ensures changes made to the ETL system do not introduce unintended side effects or break existing functionality by retesting the entire ETL process or specific components to validate behavior is as expected.
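Several of the test types above boil down to queries that should return zero violations. As a minimal sketch, assuming a hypothetical target schema with a `dim_customer` and a `fact_order` table, the checks below cover one data integrity rule and two data quality rules.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE dim_customer (customer_id INTEGER, email TEXT);
    CREATE TABLE fact_order (order_id INTEGER, customer_id INTEGER, amount REAL);
    INSERT INTO dim_customer VALUES (1, 'ana@example.com'), (2, NULL);
    INSERT INTO fact_order VALUES (10, 1, 10.5), (11, 1, 20.0), (11, 1, 20.0), (12, 9, 5.0);
    """
)

# Each query counts violations, so 0 means the check passes
CHECKS = {
    # Data integrity: orders that reference a customer that does not exist
    "orphaned_orders": """
        SELECT COUNT(*) FROM fact_order f
        LEFT JOIN dim_customer c ON c.customer_id = f.customer_id
        WHERE c.customer_id IS NULL
    """,
    # Data quality: order_id values that appear more than once
    "duplicate_order_ids": """
        SELECT COUNT(*) FROM (
            SELECT order_id FROM fact_order GROUP BY order_id HAVING COUNT(*) > 1
        )
    """,
    # Data quality: required field left empty
    "customers_missing_email": "SELECT COUNT(*) FROM dim_customer WHERE email IS NULL",
}

def run_checks(conn):
    """Run every check and return a mapping of check name to violation count."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in CHECKS.items()}

results = run_checks(conn)
print(results)
```

With the sample data, each check reports exactly one violation: order 12 points at a nonexistent customer, order 11 was loaded twice, and customer 2 has no email.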

The ETL Testing Process

The ETL testing process typically involves several stages that ensure the thorough validation of the ETL system. While specific stages may vary depending on the project, let’s take a look at a general overview of the stages:

- Test Planning: The overall strategy and objectives for ETL testing are defined, including the scope of testing and test priorities, and a test plan is created.

- Test Design: Detailed test cases and test scenarios are designed based on the requirements and specifications of the ETL system.

- Test Environment Setup: A dedicated test environment is set up to simulate the production environment for ETL testing.

- Test Data Preparation: Test data is prepared to mimic real-world scenarios and cover various data types, formats, and volumes.

- Test Execution: Test cases are executed using prepared test data in the ETL testing environment. Test results are recorded, and issues are reported.

- Data Validation and Reconciliation: Data validation compares data in the target system with expected results or source system data. Reconciliation ensures data in the target system matches the source data.

- Error Handling and Exception Testing: Testers intentionally introduce errors, exceptions, or invalid data scenarios to evaluate how the system detects, handles, and recovers from such issues.

- Performance Testing: This evaluates the ETL system's performance and scalability, including speed, resource utilization, and handling large data volumes.

- Reporting and Documentation: Test results, including defects, issues, or deviations, are documented in a test report.

- Test Closure: ETL testing concludes with test closure activities, which involve reviewing test coverage, evaluating results, and assessing the readiness of the ETL system for production deployment.
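To illustrate the error handling and exception testing stage, here is a sketch in which deliberately invalid records are fed to a transformation step. The function `transform_amounts` and its rule that amounts must be non-negative numbers are hypothetical; the point is that bad rows should be captured with a reason rather than crashing the run or silently reaching the target.

```python
def transform_amounts(rows):
    """Convert amounts to float; route bad rows to a reject list instead of failing."""
    loaded, rejected = [], []
    for row in rows:
        try:
            amount = float(row["amount"])
            if amount < 0:
                raise ValueError("negative amount")
            loaded.append({"order_id": row["order_id"], "amount": amount})
        except (KeyError, TypeError, ValueError) as exc:
            # Keep the original row and the reason so the failure can be reported
            rejected.append({"row": row, "error": str(exc)})
    return loaded, rejected

# Test data that intentionally mixes one valid row with four invalid ones
bad_input = [
    {"order_id": 1, "amount": "10.50"},
    {"order_id": 2, "amount": "abc"},   # not a number
    {"order_id": 3, "amount": None},    # missing value
    {"order_id": 4, "amount": "-5"},    # violates the assumed business rule
    {"order_id": 5},                    # missing field
]
loaded, rejected = transform_amounts(bad_input)
print(len(loaded), len(rejected))
```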

ETL Testing Tools

ETL tools often play a significant role in ETL testing, as these apps are designed to facilitate and streamline processes for validating the integrity, accuracy, and performance of data pipelines during data integration and transformation.

Originally, organizations crafted these ETL tools in-house, but nowadays, there are numerous open-source and proprietary ETL tools to choose from.

These can include data profiling tools that help testers gain insights into data patterns, anomalies, and inconsistencies, as well as test management tools for organizing and tracking test cases, managing test execution, and monitoring test progress.

Data comparison and validation tools can also be used to automate the process of comparing source and target data, speeding up the testing process and reducing the potential for human error.
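The core of an automated comparison can be sketched in a few lines. The example below is not how any specific tool works; it simply assumes both data sets fit in memory as lists of dictionaries and share a key column, then classifies the differences.

```python
def compare_by_key(source, target, key):
    """Classify differences between two row sets that share a key column."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

# Hypothetical extracts from the source system and the target warehouse
source_rows = [
    {"order_id": 1, "amount": 10.5},
    {"order_id": 2, "amount": 20.0},
    {"order_id": 3, "amount": 7.25},
]
target_rows = [
    {"order_id": 1, "amount": 10.5},
    {"order_id": 2, "amount": 25.0},
    {"order_id": 4, "amount": 3.0},
]
diff = compare_by_key(source_rows, target_rows, "order_id")
print(diff)
```

For large tables, dedicated tools typically compare checksums or push the comparison down to the database instead of loading every row into memory.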

In some cases, automated testing tools can also be used to further streamline the ETL testing process. These automatically generate test cases based on predefined rules or templates, execute tests and compare the results against the expected outcomes.
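As a rough idea of what rule-based test generation can look like, the sketch below turns a small list of declarative rules into executable checks. The rule vocabulary (`unique`, `not_null`, `min`) and the data are invented for this example and do not reflect the format of any particular tool.

```python
# Hypothetical declarative rules, e.g. maintained in a config file
RULES = [
    {"column": "order_id", "rule": "unique"},
    {"column": "amount", "rule": "not_null"},
    {"column": "amount", "rule": "min", "value": 0},
]

def build_test_cases(rules):
    """Turn declarative rules into (name, check) pairs; each check returns violating rows."""
    def make_check(rule):
        col = rule["column"]
        if rule["rule"] == "not_null":
            return lambda rows: [r for r in rows if r[col] is None]
        if rule["rule"] == "min":
            return lambda rows: [r for r in rows if r[col] is not None and r[col] < rule["value"]]
        if rule["rule"] == "unique":
            def check(rows):
                seen, dupes = set(), []
                for r in rows:
                    if r[col] in seen:
                        dupes.append(r)
                    seen.add(r[col])
                return dupes
            return check
        raise ValueError(f"unknown rule: {rule['rule']}")
    return [(f"{r['column']}_{r['rule']}", make_check(r)) for r in rules]

def run(test_cases, rows):
    """Execute each generated test; True means no violations were found."""
    return {name: len(check(rows)) == 0 for name, check in test_cases}

rows = [
    {"order_id": 1, "amount": 10.5},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 3.0},
]
outcome = run(build_test_cases(RULES), rows)
print(outcome)
```

Adding a new check then means adding one line to the rule list rather than writing another test by hand.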

Let’s look at some of the more popular ETL testing tools.

RightData is a self-service ETL tool ideal for businesses with high-volume data platforms. Its UI is also super easy to use when validating or reconciling information between data sets.

You also get features like a universal query studio, custom rule builder, data quality metrics, and integration with CI/CD tools.

QuerySurge adds an extra layer of security to your data while also ensuring it remains intact within your data repository, greatly reducing the risk of data corruption.

You also get point-to-point testing, automated email reports, data validation via the query wizard, and more.

Datagaps' ETL Validator is ideal if you need to accommodate multiple data forms, as it can compare and analyze data schemas in various environments.

You also get useful features like a drag-and-drop test builder, aggregate data comparisons, and a built-in ETL engine.

If you need comprehensive, end-to-end testing of data processes, iCEDQ is an excellent option. With a rule-based approach, you can simplify various processes, and it includes a built-in scheduler for triggering ETL testing rules.

Some other helpful features include an in-memory engine, a built-in dashboard, and advanced role-based control.

The Future of ETL Testing

As data volume, variety, and complexity continue to grow, efficient and effective ETL testing becomes increasingly important. In the future, we can expect several advancements in ETL testing driven by technological innovations, particularly in the field of artificial intelligence (AI).

AI-based techniques like machine learning and natural language processing (NLP) have the potential to revolutionize ETL testing by automating complex tasks, identifying patterns and anomalies in the data more efficiently, and predicting potential issues before they become critical.

This stands to significantly reduce the time and effort required for ETL testing, allowing testers to focus on more complex or higher-risk aspects of the data integration process. These advanced technologies can also streamline the process of generating and prioritizing test cases, improving the overall efficiency of the testing process.
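Automated anomaly detection does not have to start with a full machine learning model. As a deliberately simple statistical stand-in (not an AI technique), the sketch below flags a load whose row count deviates sharply from recent history using a z-score. The sample counts and the threshold of 3 standard deviations are assumptions for illustration.

```python
import statistics

def is_anomalous(history, latest, threshold=3.0):
    """Flag the latest value if it lies more than `threshold` standard deviations from the historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return latest != mean
    return abs(latest - mean) / stdev > threshold

# Hypothetical row counts from the last six nightly loads
daily_row_counts = [10120, 9980, 10050, 10210, 9930, 10075]

print(is_anomalous(daily_row_counts, 10100))  # a typical load
print(is_anomalous(daily_row_counts, 4200))   # a load that lost most of its rows
```

A learned model could replace the z-score here, for example to account for weekly seasonality, while the surrounding test harness stays the same.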

Conclusion

In 2024 and beyond, data-driven decision-making will continue to be an essential aspect of modern business. And with organizations relying upon ETL pipelines to handle, integrate and extract value from diverse data streams, there’s an equally important need for ETL testing.

ETL testing is a vital component of modern data integration that can help verify the correct extraction, transformation, and loading of data while preventing data loss, corruption, and inconsistencies.

The significance of ETL testing is undeniable, as it directly impacts the reliability of data analysis and reporting, influencing an organization's ability to make informed decisions, seize opportunities, and tackle obstacles effectively.

And with the ever-growing adoption of AI technologies like ChatGPT, it’s fair to assume that AI will positively impact ETL testing in the near future. So if you want to stay ahead of the curve, integrating AI into your ETL testing processes will likely become a necessity.

Frequently Asked Questions

1. Is ETL Testing Easy?

This depends on the complexity of the data, the specific business requirements, and the ETL process. ETL testing might be relatively straightforward if you have experience in database testing, SQL, and data validation techniques. If you’re a beginner, expect to first build a solid understanding of data management and business intelligence.

2. Is SQL Required for ETL testing?

ETL testing is used to verify the integrity of structured data as it moves through an ETL process, and SQL is the most common language used to interact with databases. This means that SQL knowledge is essential for writing the queries in an ETL process, comparing source and target data, and validating data transformations.

3. Which Language Is Best for ETL?

There isn't a single "best" language for ETL, as the choice depends on various factors, such as the specific tools used, the existing infrastructure, and the skill set of the development team. Common languages used for ETL include SQL, Python, and Java.
